Building an AI-Powered Data Analysis Agent: A Deep Dive into Daytona, Fetch.ai, and Autonomous Agent Systems

INTRODUCTION

Intelligent agents that can securely execute code, analyze data, and generate insights on their own are changing how we approach data science and automation. In this article, I'll walk through how I built a data summarization assistant that combines four technologies: Daytona for secure sandbox execution, Fetch.ai's uAgents framework for autonomous agent communication, Agentverse for agent discovery and orchestration, and the ASI API for LLM-based summarization.

This project demonstrates a complete end-to-end system where an AI agent receives data requests, spins up isolated execution environments, performs comprehensive data analysis, generates visualizations, and delivers beautiful web reports—all autonomously and securely.

THE PROBLEM: WHY AUTONOMOUS DATA ANALYSIS AGENTS?

Traditional data analysis workflows require:

- Manual setup of analysis environments

- Writing custom scripts for each dataset

- Managing dependencies and runtime environments

- Ensuring security when executing untrusted code

- Creating visualization dashboards manually

Our solution automates all of this. Given a data URL (CSV, JSON, or Google Sheets), the agent automatically:

- Creates a secure sandbox environment

- Downloads and validates the data

- Performs statistical analysis

- Generates visualizations

- Creates a web report

- Returns a preview URL

All of this happens in well under a minute, with zero manual intervention.

TECHNOLOGY STACK OVERVIEW

Our architecture leverages four key technologies:

- Daytona: Secure sandbox execution platform

- Fetch.ai uAgents: Autonomous agent framework

- Agentverse: Agent discovery and orchestration platform

- ASI API: Large Language Model for intelligent summarization

Let's dive deep into each component.

PART 1: DAYTONA - SECURE SANDBOX EXECUTION

What is Daytona?

Daytona is a cloud-native development environment platform that provides secure, isolated sandboxes for code execution. Think of it as Docker containers on steroids—each sandbox is a fully isolated Linux environment with its own file system, network, and compute resources.

Why Daytona for Agent Execution?

When building autonomous agents that execute code, security is paramount. You can't trust arbitrary code execution on your own infrastructure. Daytona solves this by:

- Isolation: Each sandbox is completely isolated from your host system

- Ephemeral: Sandboxes can be created and destroyed on-demand

- Preview URLs: Automatic HTTPS endpoints for web applications

- API-First: Programmatic control via Python SDK

- Resource Management: Automatic cleanup and resource limits

How We Use Daytona in Our Agent

In our data analysis agent, Daytona serves as the execution engine:

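    # Import path assumes the daytona-sdk package from the install step
    from daytona_sdk import Daytona, DaytonaConfig, SessionExecuteRequest

    # daytona_api_key and flask_code are defined elsewhere in the project

    # Create a sandbox
    daytona = Daytona(DaytonaConfig(api_key=daytona_api_key))
    sandbox = daytona.create()

    # Upload our Flask app
    sandbox.fs.upload_file(flask_code.encode(), "app.py")

    # Create the session used by the commands below (the name is illustrative)
    exec_session_id = "report-session"
    sandbox.process.create_session(exec_session_id)

    # Install dependencies (blocks until pip finishes)
    sandbox.process.execute_session_command(
        exec_session_id,
        SessionExecuteRequest(command="pip install flask pandas matplotlib", run_async=False),
    )

    # Start the Flask app (runs in the background)
    sandbox.process.execute_session_command(
        exec_session_id,
        SessionExecuteRequest(command="python3 app.py", run_async=True),
    )

    # Get preview URL for the port the Flask app listens on
    preview_info = sandbox.get_preview_link(3000)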

The workflow:

- Agent receives data URL

- Creates Daytona sandbox (isolated environment)

- Downloads and analyzes data locally

- Generates Flask app with results

- Uploads app to sandbox

- Installs dependencies in sandbox

- Runs Flask app in sandbox

- Returns preview URL to user

This entire process takes 30-45 seconds, and the sandbox can be destroyed after use, ensuring no data leakage or resource waste.

Key Daytona Features We Leverage:

- File System Operations: Upload code, data files, and assets

- Process Management: Execute commands, create sessions, run long-lived processes

- Network Access: Sandboxes can make outbound HTTP requests (to download data)

- Preview Links: Automatic HTTPS URLs for web apps running in sandboxes

- Session Management: Persistent execution contexts for multi-step operations

Security Benefits:

- Code runs in complete isolation

- No access to host file system or network

- Automatic resource limits

- Ephemeral by default (destroy after use)

- Network isolation (only outbound HTTP allowed)

PART 2: FETCH.AI AND UAGENTS FRAMEWORK

What is Fetch.ai?

Fetch.ai is building an open, permissionless, decentralized network for autonomous agents. Their vision is to create an "economy of things" where AI agents can autonomously transact, collaborate, and provide services.

🌍 Fetch.ai Ecosystem

Fetch.ai offers a powerful, comprehensive platform for creating, deploying, and connecting autonomous agents. The ecosystem consists of four key components that work together seamlessly:

- uAgents Framework: A lightweight Python framework for building agents with built-in identity, messaging, and protocol support. It provides everything you need to create autonomous agents that can communicate, store state, and execute complex workflows.

- Agentverse: An open marketplace where agents can be registered, discovered, and utilized. Think of it as the "App Store" for autonomous agents: developers publish their agents, and users can discover and interact with them through a unified interface.

- ASI One LLM: Fetch.ai's Web3-native Large Language Model designed specifically for agentic workflows. What makes ASI unique is its capability to discover agents on Agentverse autonomously, enabling LLM-powered agents to find and collaborate with other agents dynamically.

- Chat Protocol: A standardized communication protocol for conversational interactions between agents. This protocol ensures interoperability: any agent implementing the chat protocol can communicate with any other agent, regardless of who built it.

Together, these components create a complete ecosystem where:

- Developers can easily build agents using uAgents

- Agents can be discovered and used via Agentverse

- LLM-powered agents can autonomously find and use other agents via ASI

- All agents can communicate using standardized protocols

The uAgents Framework

uAgents is Fetch.ai's Python framework for building autonomous agents. It provides:

- Agent Identity: Each agent has a unique address (like a blockchain address)

- Message Protocols: Standardized communication protocols

- Mailbox: Reliable message delivery system

- Protocol Handlers: Event-driven message processing

- Context Management: Stateful agent execution

Our Agent Architecture

Our data summarization agent is built using uAgents:

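    from datetime import datetime, timezone

    from uagents import Agent, Context, Protocol
    from uagents_core.contrib.protocols.chat import (
        ChatAcknowledgement,
        ChatMessage,
        TextContent,
        chat_protocol_spec,
    )

    agent = Agent(
        name="data-summarization-agent",
        seed="data-summarization-agent-seed-daytona",
        port=8000,
        mailbox=True,
    )

    protocol = Protocol(spec=chat_protocol_spec)


    @protocol.on_message(ChatMessage)
    async def handle_message(ctx: Context, sender: str, msg: ChatMessage):
        # Acknowledge receipt before starting the slow work
        await ctx.send(
            sender,
            ChatAcknowledgement(
                timestamp=datetime.now(timezone.utc),
                acknowledged_msg_id=msg.msg_id,
            ),
        )
        # Collect the text parts of the incoming chat message
        text = "".join(part.text for part in msg.content if isinstance(part, TextContent))
        # Extract data URL from text
        # Trigger data analysis
        # Reply with preview URL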

Key uAgents Concepts:

- Agent: The autonomous entity with its own identity

- Protocol: Communication specification (we use the chat protocol)

- Context: Execution context with logging, storage, and messaging

- Message Handlers: Async functions that process incoming messages

- Mailbox: Ensures reliable message delivery even if agent is offline

How Messages Flow:

- User sends ChatMessage to agent address

- Agent receives message via mailbox

- The handle_message handler processes the message

- Agent sends acknowledgement

- Agent processes request (creates sandbox, analyzes data)

- Agent sends reply with results
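The "extract data URL from message" step in this flow can be sketched with a regular expression. This is a minimal illustration, not the project's actual parsing code: the function name `extract_data_url` and the pattern are assumptions made for the example.

```python
import re
from typing import Optional

# Matches http(s) URLs up to the next whitespace, angle bracket, or quote.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def extract_data_url(text: str) -> Optional[str]:
    """Return the first http(s) URL found in the message text, or None."""
    match = URL_PATTERN.search(text)
    if match is None:
        return None
    # Strip trailing punctuation that commonly follows a URL in a sentence.
    return match.group(0).rstrip(".,;:!?)")


print(extract_data_url("Please analyze https://example.com/sales.csv, thanks!"))
```

If no URL is found, a handler could fall back to treating the message body as raw CSV or JSON text.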

The Chat Protocol:

The Chat Protocol is a standardized communication protocol for conversational interactions between agents. It's one of the core protocols in the Fetch.ai ecosystem, ensuring seamless interoperability.

Protocol Components:

- ChatMessage: Contains text content, timestamp, message ID, and sender information

- ChatAcknowledgement: Confirms message receipt with acknowledged message ID

- TextContent: Plain text message parts that can be combined for rich content

Why Standardization Matters:

This standardization is crucial for the Fetch.ai ecosystem because:

- Interoperability: Any agent speaking the chat protocol can communicate with any other agent, regardless of who built it

- Network Effects: As more agents adopt the protocol, the entire network becomes more valuable

- Composability: Agents can be easily combined into complex workflows

- Discovery: Agents can be discovered and used through Agentverse using standard protocols

- Future-Proof: Standard protocols ensure agents remain compatible as the ecosystem evolves

In our project, we use the chat protocol to enable conversational interactions with our data analysis agent, making it accessible to any other agent or user interface that supports the protocol.

PART 3: AGENTVERSE - AGENT DISCOVERY AND ORCHESTRATION

What is Agentverse?

Agentverse is Fetch.ai's open marketplace where agents can be registered, discovered, and utilized. Think of it as the "App Store" or "Service Directory" for autonomous agents—a central hub where the agent economy comes to life.

Key Features:

- Agent Discovery: Find agents by capability, service type, or use case

- Agent Registry: Public directory of available agents with search and filtering

- Service Descriptions: Agents advertise their capabilities, pricing, and usage instructions

- Network Effects: As more agents join, the platform becomes more valuable for everyone

- Integration: Seamless integration with uAgents framework and ASI LLM

- User Interface: Web-based UI for discovering and interacting with agents

- Agent-to-Agent Discovery: Agents can discover and use other agents programmatically

How Our Agent Fits In:

Our data summarization agent can be:

- Published to Agentverse: Other agents can discover and use it

- Discovered by Users: Users can find our agent via Agentverse UI

- Composed with Other Agents: Combined with other agents for complex workflows

Example Workflow:

- User searches Agentverse for "data analysis"

- Finds our agent

- Connects to agent via chat protocol

- Sends data URL

- Receives analysis results

Future Possibilities:

- Agent Composition: Our agent could call other agents (e.g., a visualization specialist agent)

- Service Marketplace: Agents could charge for services using Fetch.ai tokens

- Autonomous Collaboration: Agents could autonomously form teams to solve complex problems

PART 4: ASI API - INTELLIGENT SUMMARIZATION

What is ASI?

ASI One (ASI stands for Artificial Superintelligence, as in the ASI Alliance) is Fetch.ai's Web3-native Large Language Model designed specifically for agentic workflows. Unlike traditional LLMs, ASI is built with agent autonomy in mind and has the unique capability to discover agents on Agentverse autonomously.

Key Features of ASI:

- Web3-Native: Built specifically for decentralized agent networks

- Agent Discovery: Can autonomously discover and interact with agents on Agentverse

- Agentic Workflows: Optimized for multi-agent collaboration scenarios

- Standard API: Compatible with OpenAI-style API for easy integration

Why ASI for Summarization?

While our data analyzer generates comprehensive statistical summaries, ASI adds:

- Natural Language: Converts technical stats into readable insights

- Contextual Understanding: Identifies patterns and trends

- Conciseness: Distills complex analysis into key points

- Human-Friendly: Explains insights in plain language

- Agent-Aware: Can potentially discover and use other agents for enhanced analysis

Future Possibilities with ASI:

In future iterations, our agent could leverage ASI's agent discovery capabilities to:

- Find specialized analysis agents on Agentverse

- Delegate specific analysis tasks to expert agents

- Compose multi-agent workflows autonomously

- Collaborate with other agents for complex data analysis

Integration in Our Agent:

    import requests


    def get_asi_llm_summary(api_key: str, content: str) -> str:
        """Use ASI LLM to refine/shorten the summary text"""
        headers = {
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        }
        payload = {
            "model": "asi1-mini",
            "messages": [
                {
                    "role": "system",
                    "content": "You are a data analyst. Summarize data insights clearly and concisely (5-8 bullet points).",
                },
                {
                    "role": "user",
                    "content": f"Summarize these dataset insights:\n\n{content}",
                },
            ],
        }
        response = requests.post(
            "https://api.asi1.ai/v1/chat/completions",
            json=payload,
            headers=headers,
            timeout=30,
        )
        # Fail loudly on HTTP errors instead of on a missing "choices" key
        response.raise_for_status()
        return response.json()["choices"][0]["message"]["content"]

How It Works:

- Data analyzer generates technical summary (statistics, insights)

- Technical summary sent to ASI API

- ASI LLM converts to natural language

- Refined summary included in agent response

Example Transformation:

Before (Technical):

Dataset summary:

- Rows: 1,234

- Columns: 7

- Numeric columns: Sales, Revenue, Units_Sold

- Missing cells: 45 (0.52%)

- Sales: mean 1450.50, median 1500.00, range 500.00-2700.00

After (ASI-Enhanced):

📊 Dataset Overview:

• Analyzed 1,234 records across 7 dimensions

• Sales performance shows strong consistency (median: $1,500)

• Sales range from $500 to $2,700 with minimal missing data (0.52%)

• Key metrics indicate healthy distribution with no significant outliers

The ASI integration is optional—if no API key is provided, the agent still works with technical summaries.
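One way to express this optional step is a small wrapper that falls back to the technical summary. The sketch below is illustrative: `refine_summary` is a hypothetical name, and falling back on request errors (not only on a missing key) is an assumption added here.

```python
from typing import Callable, Optional


def refine_summary(
    technical_summary: str,
    api_key: Optional[str],
    summarize: Callable[[str, str], str],
) -> str:
    """Return an LLM-refined summary, or the technical one if refinement is unavailable."""
    if not api_key:
        # No key configured: skip the LLM call entirely.
        return technical_summary
    try:
        refined = summarize(api_key, technical_summary)
    except Exception:
        # Assumption: a failed LLM call should not break the agent's reply.
        return technical_summary
    # Guard against an empty response from the model.
    return refined.strip() or technical_summary


# With no API key, the technical summary is returned unchanged.
print(refine_summary("Rows: 1,234", None, lambda key, text: "unused"))
```

In the agent, the `summarize` argument would be a function such as `get_asi_llm_summary`, with the key read from the `ASI_API_KEY` environment variable.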

PART 5: TECHNICAL ARCHITECTURE - HOW IT ALL WORKS TOGETHER

System Architecture

Our system consists of two main components:

- agent.py: The uAgents chat agent

- data_analyzer.py: Core data processing and sandbox management

Data Flow:

User → ChatMessage → uAgents Agent → Data Analyzer → Daytona Sandbox → Flask App → Preview URL → User

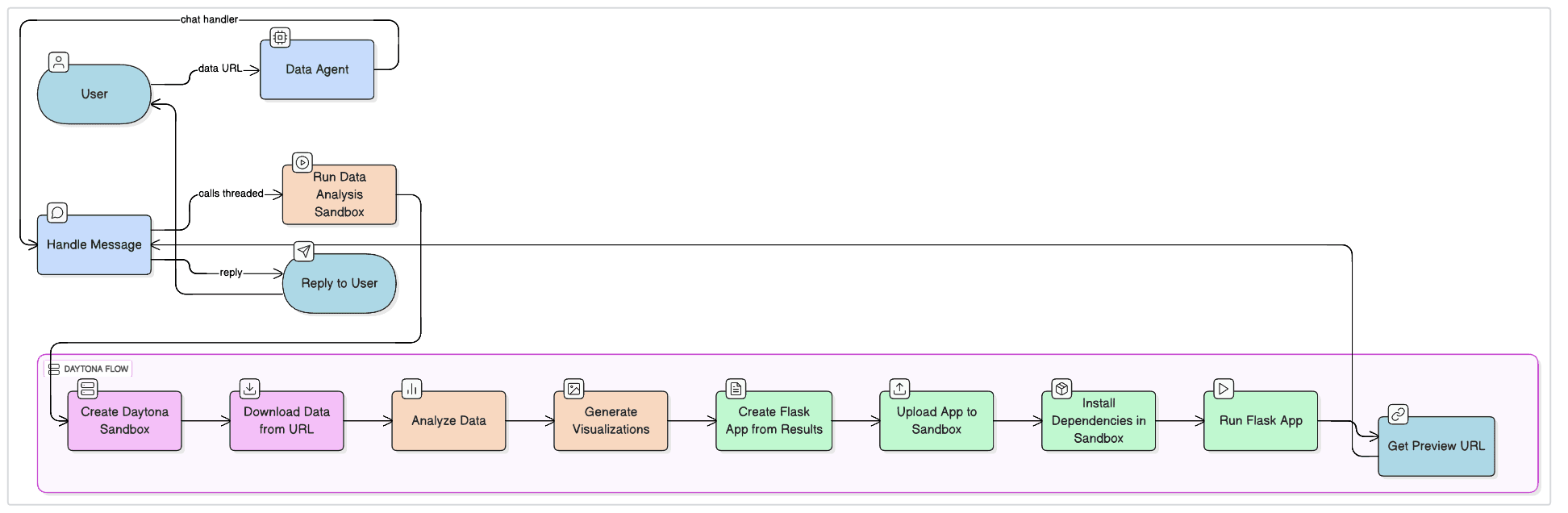

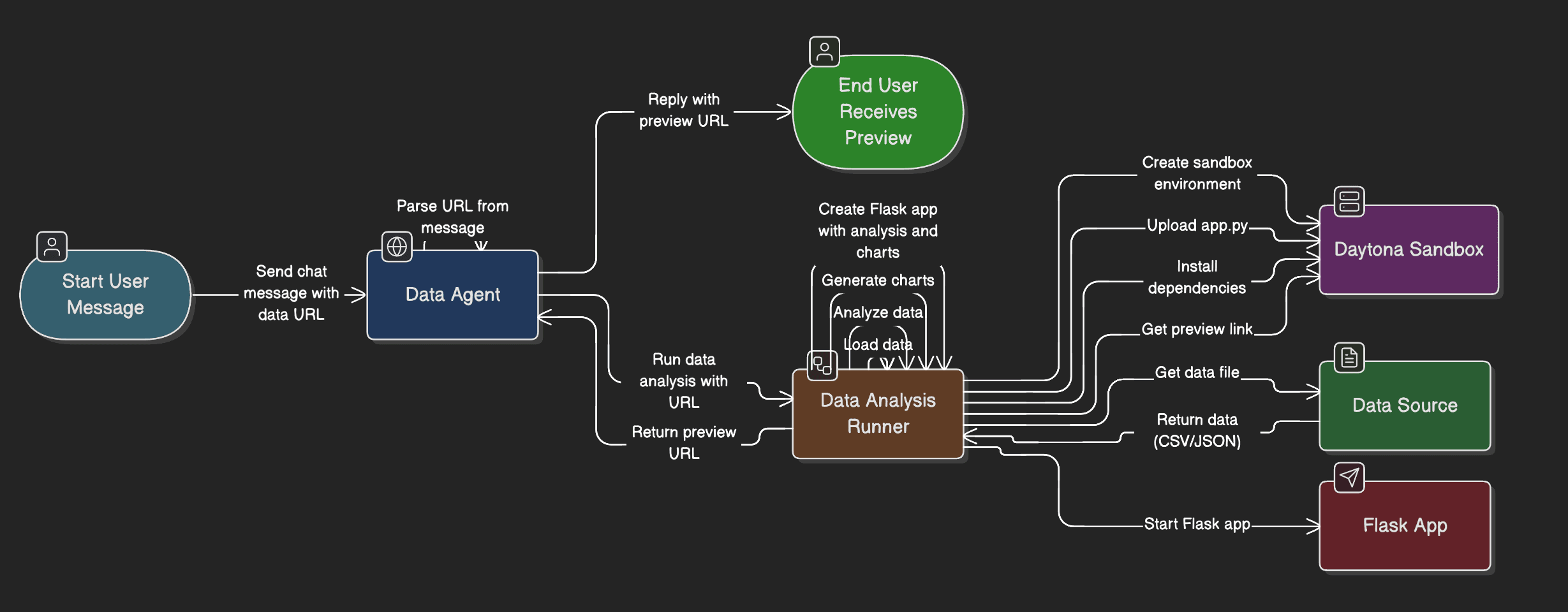

Visual Architecture Diagrams:

Daytona Flow - System Workflow:

Daytona Flow - Detailed Process:

Detailed Workflow:

The complete workflow begins with Message Reception in the agent.py file. When a user sends a chat message containing a data URL or raw data, the agent receives it through the uAgents mailbox system. The agent extracts the text content from the message and immediately sends an acknowledgement back to the user, confirming that the request has been received and is being processed.

Once the message is received, the system moves to Data Processing handled by data_analyzer.py. This component is responsible for parsing various input formats including URLs, local file paths, or raw text data. If a URL is provided, the system downloads the data automatically. The system also intelligently handles Google Sheets URLs by converting them to CSV export format. Once the data is obtained, it's loaded into a pandas DataFrame for further processing, ensuring that the data is in a structured format that can be easily analyzed.

The Data Analysis phase is where the real intelligence of the system comes into play. The data_analyzer.py module performs comprehensive statistical analysis including calculating mean, median, standard deviation, and other key metrics. It detects missing values and calculates their percentage, providing insights into data quality. The system analyzes column types, identifying numeric, categorical, and complex data types. Based on this analysis, it generates key insights that help users understand their data better.

Following the analysis, the system moves to Visualization Generation. The data_analyzer.py creates various types of visualizations to help users understand their data visually. For numeric columns, it generates histograms that show the distribution of values. For categorical columns, it creates bar charts showing the frequency of different categories. When multiple numeric columns are present, it generates correlation heatmaps that visualize relationships between variables. All charts are saved as PNG files for inclusion in the final report.

The Report Creation phase transforms all the analysis and visualizations into a comprehensive HTML report. The data_analyzer.py formats the statistical analysis, embeds the charts as base64 images directly in the HTML, and creates a complete Flask application that serves this report. This approach ensures that the report is self-contained and doesn't require separate file serving infrastructure.

Sandbox Deployment is where Daytona's power becomes evident. The data_analyzer.py creates a new Daytona sandbox, providing an isolated environment for running the Flask application. It uploads the Flask app code to the sandbox, installs all necessary dependencies including Flask, pandas, matplotlib, and seaborn. Once everything is set up, it starts the Flask app within the sandbox and retrieves a preview URL that provides secure access to the running application.

Finally, Response Generation completes the cycle. The agent.py optionally refines the summary using the ASI API to make it more readable and user-friendly. It formats the reply message with the preview URL and sends it back to the user through the ChatMessage protocol, completing the entire workflow from data URL to interactive web report.

Key Technical Decisions:

Several important technical decisions were made to ensure the system works reliably and efficiently. Async Execution was implemented so that data analysis runs in an executor thread, preventing the agent from being blocked during long-running analysis operations. This ensures that the agent remains responsive and can handle multiple requests concurrently.
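The executor pattern described here can be sketched with the standard library alone. `analyze_blocking` is a hypothetical stand-in for the real synchronous analysis call. The point is only that the event loop stays free while the work runs in a thread.

```python
import asyncio
import time


def analyze_blocking(source: str) -> str:
    """Stand-in for a slow, synchronous analysis call (hypothetical)."""
    time.sleep(0.1)
    return f"analysis of {source}"


async def handle_request(source: str) -> str:
    loop = asyncio.get_running_loop()
    # Passing None selects the loop's default ThreadPoolExecutor.
    return await loop.run_in_executor(None, analyze_blocking, source)


async def main() -> list:
    # Both requests overlap instead of running back to back.
    return await asyncio.gather(handle_request("a.csv"), handle_request("b.csv"))


results = asyncio.run(main())
print(results)
```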

Base64 Charts were chosen as the method for embedding visualizations in the HTML report. This approach eliminates the need for separate file serving infrastructure, making the Flask app completely self-contained. All charts are converted to base64 strings and embedded directly in the HTML, which simplifies deployment and ensures that visualizations are always available.
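As a rough illustration of the embedding step, the helper below turns PNG bytes into an inline `<img>` tag using a data URI. The name `png_to_img_tag` is made up for this sketch. With matplotlib, the bytes would typically come from saving a figure into an in-memory buffer.

```python
import base64
import html


def png_to_img_tag(png_bytes: bytes, alt: str = "chart") -> str:
    """Embed PNG bytes in an <img> tag as a base64 data URI."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    # Escape the alt text so it cannot break out of the attribute.
    return f'<img src="data:image/png;base64,{encoded}" alt="{html.escape(alt)}">'


# The first four bytes of any PNG file, used here as tiny sample input.
tag = png_to_img_tag(b"\x89PNG", alt="Sales histogram")
print(tag)
```

The trade-off is size: base64 inflates the image data by about a third, which is usually acceptable for a handful of charts.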

Health Checks are implemented to ensure that the Flask app is fully ready before returning the preview URL to the user. The system waits for the Flask application to start successfully and become responsive before providing the URL, preventing users from accessing incomplete or broken applications.
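A readiness check of this kind usually boils down to polling with a deadline. The sketch below assumes a simple "HTTP 200 means ready" rule. The function names, timeouts, and intervals are illustrative, not taken from the project.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_ok(url: str) -> bool:
    """Return True if a GET request to url answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status: not ready yet.
        return False


def wait_until_ready(probe: Callable[[], bool], timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Call probe repeatedly until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if probe():
            return True
        time.sleep(interval)
    return False
```

A caller would pass something like `lambda: http_ok(preview_url)` and only return the URL to the user when the result is `True`.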

Error Handling is comprehensive throughout the system. Every step includes proper error catching and handling, with cleanup operations that ensure resources are properly released even when errors occur. This prevents resource leaks and ensures that failed operations don't leave the system in an inconsistent state.

Resource Management is critical for a system that creates and destroys sandboxes dynamically. The system ensures that sandboxes are deleted both after successful completion and when errors occur, preventing resource waste and ensuring that each analysis gets a fresh, clean environment.
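The cleanup guarantee can be sketched as a context manager. To keep the example runnable without credentials, it uses a `FakeClient` stand-in. The only assumption about the real client is that it exposes `create()` and a `delete(sandbox)` call, which should be checked against the SDK version in use.

```python
from contextlib import contextmanager


class FakeClient:
    """Stand-in for a sandbox client; only create() and delete() are assumed."""

    def __init__(self):
        self.deleted = []

    def create(self):
        return {"id": "sandbox-1"}

    def delete(self, sandbox):
        self.deleted.append(sandbox["id"])


@contextmanager
def managed_sandbox(client):
    """Yield a fresh sandbox and always delete it afterwards."""
    sandbox = client.create()
    try:
        yield sandbox
    finally:
        # Runs on normal exit and when the body raises.
        client.delete(sandbox)


client = FakeClient()
try:
    with managed_sandbox(client) as sandbox:
        raise RuntimeError("analysis failed")
except RuntimeError:
    pass

print(client.deleted)
```

When the preview URL has to stay reachable for the user, the deletion would presumably be deferred until the report is no longer needed, rather than run at the end of the request.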

PART 6: DATA ANALYSIS CAPABILITIES

Our agent performs comprehensive data analysis that goes far beyond simple data loading. The system is designed to understand data structure, identify patterns, and provide meaningful insights that help users make sense of their information.

Statistical Analysis:

The statistical analysis capabilities are extensive and cover all major aspects of data understanding. Summary Statistics are calculated for all numeric columns, providing mean, median, standard deviation, minimum, and maximum values. These basic statistics give users a quick overview of their numeric data, helping them understand the central tendencies and variability in their datasets.
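For readers who want to see exactly what these numbers are, here is a dependency-free sketch using Python's `statistics` module. The project itself works on pandas DataFrames; the function name and sample values below are illustrative only.

```python
import statistics


def summarize_numeric(values):
    """Compute the basic summary statistics for one numeric column."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        # Sample standard deviation (divides by n - 1).
        "std": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }


stats = summarize_numeric([500.0, 1500.0, 1500.0, 2700.0])
print(stats)
```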

Distribution Analysis goes deeper, calculating skewness to understand data asymmetry, identifying quartiles to understand data spread, and detecting outliers that might indicate data quality issues or interesting anomalies. This analysis helps users understand not just what their data contains, but how it's distributed and whether there are any unusual patterns.
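The article does not say which outlier rule the analyzer applies, so the sketch below assumes the common 1.5 x IQR rule: values more than 1.5 interquartile ranges outside the first or third quartile are flagged. The function name and sample data are made up for illustration.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    # method="inclusive" treats the data as the full population of interest.
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


outliers = iqr_outliers([10, 12, 11, 13, 12, 11, 95])
print(outliers)
```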

Missing Data detection is crucial for data quality assessment. The system identifies all missing values, calculates the percentage of missing data for each column, and reports this information clearly. This helps users understand data completeness and make informed decisions about how to handle missing information.
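The per-column percentage is simply the number of empty cells divided by the row count. A small standard-library sketch follows; treating empty or whitespace-only cells as missing is an assumption of this example, and the sample CSV is invented.

```python
import csv
import io


def missing_percentages(csv_text):
    """Return the percentage of empty cells per column of a CSV string."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return {}
    result = {}
    for column in rows[0].keys():
        # Short rows yield None for absent fields; count those as missing too.
        missing = sum(1 for row in rows if (row[column] or "").strip() == "")
        result[column] = round(100.0 * missing / len(rows), 2)
    return result


sample = "region,sales\nnorth,100\nsouth,\n,250\nwest,300\n"
report = missing_percentages(sample)
print(report)
```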

Correlation Analysis examines relationships between numeric variables, calculating correlation coefficients that show how variables relate to each other. This helps users understand dependencies in their data and identify variables that might be redundant or highly related.
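The coefficient in question is typically the Pearson correlation, which ranges from -1 (perfectly inverse) to 1 (perfectly aligned). A minimal hand-rolled version, shown only to make the calculation concrete:

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # A constant column has no defined correlation.
        return float("nan")
    return cov / math.sqrt(var_x * var_y)


r = pearson([1, 2, 3, 4], [2, 4, 6, 8])
print(round(r, 6))
```

Two columns with a coefficient close to 1 or -1 carry largely the same information, which is the redundancy the paragraph above refers to.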

Visualization Generation:

Visualizations are automatically generated to help users understand their data at a glance. Histograms are created for numeric columns, showing the distribution of values and helping users understand data spread and patterns. The system generates histograms for up to 5 numeric columns, ensuring that users get visual insights without overwhelming them with too many charts.

Bar Charts are created for categorical columns, showing the frequency of different categories and helping users understand which categories are most common. The system generates bar charts for up to 3 categorical columns, focusing on the most important categorical data in the dataset.

Correlation Heatmaps provide a visual representation of relationships between numeric variables. These heatmaps use color coding to show correlation strength, making it easy to identify strongly correlated variables at a glance. This visualization is particularly valuable for understanding complex relationships in multi-variable datasets.

Data Type Handling:

The system intelligently handles different data types, applying appropriate analysis methods for each type. Numeric columns receive full statistical analysis including all summary statistics, distribution analysis, and correlation calculations. This comprehensive analysis ensures that numeric data is thoroughly understood.

Categorical columns are analyzed differently, with value counts showing frequency distributions, unique value identification, and most common value reporting. This analysis helps users understand categorical distributions and identify dominant categories in their data.

Complex Types like dictionaries and lists are identified but not deeply analyzed, as they require specialized processing that would be beyond the scope of automated analysis. However, the system reports their presence so users know they exist in the dataset.

Missing Values are detected and reported for all data types, providing a comprehensive view of data completeness across the entire dataset. This information is crucial for data quality assessment and helps users make informed decisions about data cleaning and preprocessing.

Supported Data Sources:

- CSV URLs: Any publicly accessible CSV file

- JSON URLs: API endpoints or JSON files

- Google Sheets: Automatic CSV export URL conversion

- Raw CSV Text: Paste CSV directly in chat

- Raw JSON Text: Paste JSON directly in chat

- Local Files: Absolute file paths
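Telling these input kinds apart can be done with a few ordered checks. The sketch below is one plausible way to do it, not the project's actual logic: `classify_input` is a hypothetical helper, and treating everything else as CSV text is an assumption.

```python
import json
import os


def classify_input(text: str) -> str:
    """Classify chat input as 'url', 'file', 'json', or 'csv'."""
    stripped = text.strip()
    if stripped.lower().startswith(("http://", "https://")):
        return "url"
    if os.path.isabs(stripped) and os.path.exists(stripped):
        return "file"
    if stripped.startswith(("{", "[")):
        try:
            json.loads(stripped)
            return "json"
        except json.JSONDecodeError:
            # Looked like JSON but did not parse; fall through to CSV.
            pass
    return "csv"


print(classify_input("https://example.com/data.csv"))
print(classify_input('[{"a": 1}]'))
print(classify_input("a,b\n1,2"))
```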

Google Sheets Integration:

Our agent automatically handles Google Sheets URLs:

    if 'docs.google.com' in url and '/spreadsheets/' in url:
        # Extract spreadsheet ID (extract_id is a helper defined elsewhere in the project)
        spreadsheet_id = extract_id(url)
        # Convert to CSV export format; gid identifies the sheet tab and is parsed from the URL
        url = f"https://docs.google.com/spreadsheets/d/{spreadsheet_id}/export?format=csv&gid={gid}"

This allows users to simply share a Google Sheets link, and the agent handles the rest.
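A self-contained version of this conversion might look like the following. The regular expression, the default `gid` of 0, and the function name `to_csv_export_url` are assumptions for the sketch. The export only works for sheets that are shared publicly.

```python
import re
from urllib.parse import parse_qs, urlparse

# The spreadsheet ID is the path segment after /spreadsheets/d/.
SHEET_ID_PATTERN = re.compile(r"/spreadsheets/d/([a-zA-Z0-9_-]+)")


def to_csv_export_url(url: str) -> str:
    """Convert a Google Sheets link to its CSV export URL; other URLs pass through."""
    parsed = urlparse(url)
    if "docs.google.com" not in parsed.netloc or "/spreadsheets/" not in parsed.path:
        return url
    match = SHEET_ID_PATTERN.search(parsed.path)
    if match is None:
        return url
    spreadsheet_id = match.group(1)
    # gid may appear in the query (?gid=123) or the fragment (#gid=123).
    gid = "0"
    for part in (parsed.query, parsed.fragment):
        values = parse_qs(part).get("gid")
        if values:
            gid = values[0]
            break
    return f"https://docs.google.com/spreadsheets/d/{spreadsheet_id}/export?format=csv&gid={gid}"


print(to_csv_export_url("https://docs.google.com/spreadsheets/d/abc123_XYZ/edit#gid=42"))
```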

PART 7: WEB REPORT GENERATION

The Flask App:

Our agent generates a complete Flask web application:

    from flask import Flask

    app = Flask(__name__)


    @app.route('/')
    def analysis():
        html_content = """<!DOCTYPE html>..."""
        return html_content

HTML Report Features:

- Modern Design: Gradient background, card-based layout

- Responsive: Works on desktop, tablet, and mobile

- Interactive: Hover effects, smooth transitions

- Comprehensive: All analysis results in one place

- Visual: Embedded charts and visualizations

Report Sections:

- Header: Title and branding

- Data Source: Link or preview of input data

- Data Overview: Rows, columns, column names

- Summary Statistics: Statistical tables for numeric columns

- Missing Values: Report of missing data

- Key Insights: Bulleted list of insights

- Visualizations: All generated charts

- Footer: Attribution and branding

The HTML is completely self-contained—all CSS and images are embedded, so the Flask app is just a single file.

PART 8: USE CASES AND APPLICATIONS

Real-World Applications:

- Business Intelligence:

  - Sales data analysis

  - Revenue trend analysis

  - Performance metrics tracking

- Data Science:

  - Exploratory data analysis

  - Quick data profiling

  - Dataset validation

- Research:

  - Experimental data analysis

  - Survey result processing

  - Research data summarization

- Automation:

  - Scheduled data reports

  - Automated insights generation

  - Data quality monitoring

- Education:

  - Teaching data analysis

  - Student project analysis

  - Research assistance

Integration Scenarios:

- Slack Bot: Agent receives data URLs via Slack

- Email Agent: Processes data from email attachments

- API Service: REST API wrapper around agent

- Workflow Automation: Part of larger automated workflows

- Multi-Agent Systems: Collaborates with other agents

PART 9: SECURITY AND BEST PRACTICES

Security Considerations:

- Sandbox Isolation: All code execution in isolated Daytona sandboxes

- No Host Access: Sandboxes cannot access host file system

- Network Isolation: Only outbound HTTP allowed

- Ephemeral Execution: Sandboxes destroyed after use

- API Key Management: Keys stored in environment variables

Best Practices:

- Error Handling: Comprehensive try/except blocks

- Resource Cleanup: Always delete sandboxes after use

- Input Validation: Validate data URLs and formats

- Timeout Management: Set timeouts for HTTP requests

- Logging: Comprehensive logging for debugging

Limitations:

- Public Data Only: URLs must be publicly accessible

- Sandbox Lifetime: Sandboxes may time out after inactivity

- Resource Limits: Large datasets may take longer

- Network Dependency: Requires internet for data download

PART 10: FUTURE ENHANCEMENTS

Potential Improvements:

- More Visualizations: Scatter plots, line charts, box plots

- Advanced Analytics: Machine learning insights, anomaly detection

- Data Export: Download analysis results as PDF or Excel

- Scheduled Reports: Automatic periodic analysis

- Multi-Agent Collaboration: Specialized agents for different analysis types

- Custom Dashboards: User-configurable report templates

- Real-Time Analysis: Streaming data analysis

- Database Integration: Direct database connections

Agent Network Expansion:

- Visualization Specialist Agent: Dedicated chart generation

- Statistical Analysis Agent: Advanced statistical methods

- Data Cleaning Agent: Pre-processing and data quality

- Report Generation Agent: Custom report formatting

CONCLUSION

This project demonstrates the power of combining modern technologies:

- Daytona provides secure, isolated execution environments

- Fetch.ai uAgents enables autonomous agent communication

- Agentverse facilitates agent discovery and collaboration

- ASI API adds intelligent summarization capabilities

Together, they create a complete autonomous data analysis system that:

- Receives data requests

- Securely executes analysis code

- Generates comprehensive reports

- Delivers results via web interface

The Future of Autonomous Agents:

As agent frameworks mature and sandbox technologies improve, we'll see more autonomous systems that can:

- Execute complex workflows

- Collaborate with other agents

- Provide services autonomously

- Operate in decentralized networks

Our data summarization agent is just the beginning. The combination of secure execution (Daytona), agent frameworks (Fetch.ai), and AI capabilities (ASI) opens up endless possibilities for autonomous systems.

GETTING STARTED

To build your own agent:

- Get API Keys:

  - Daytona API key from daytona.io

  - ASI API key from asi1.ai (optional)

  - Fetch.ai account for Agentverse (optional)

- Install Dependencies:

      pip install uagents daytona-sdk pandas matplotlib seaborn flask requests python-dotenv

- Set Environment Variables:

      export DAYTONA_API_KEY=your_key
      export ASI_API_KEY=your_key  # optional

- Run the Agent:

      python agent.py

- Send Data:

  - Via uAgents chat protocol

  - Or modify for your use case

The code is open source and available on GitHub. Feel free to fork, modify, and extend it for your needs!

RESOURCES

- Daytona: https://www.daytona.io

- Fetch.ai: https://fetch.ai

- uAgents: https://github.com/fetchai/uAgents

- Agentverse: https://agentverse.ai

- ASI API: https://asi1.ai

- Project Repository: https://github.com/gautammanak1/Data-Summarization-fetchai-daytona

ABOUT THE AUTHOR

This project demonstrates the integration of cutting-edge technologies for autonomous agent systems. The combination of secure sandbox execution, agent frameworks, and AI capabilities represents the future of automated data analysis and intelligent systems.

For questions, contributions, or collaborations, please reach out via the project repository.